Over the past few days, I’ve been playing with Quartz in a .NET Core application. My goal was to create a scheduler that could trigger other services through the APIs those services expose.

A while back, I wrote about a distributed compute system. I thought this was a pretty neat concept, but it’s a bit much if all you want to do is schedule jobs. The key concept that I wanted to reuse from that project, though, was hosting all of my services as Kestrel processes that have a simple API. The details of the API aren’t important, except that each service has a trigger endpoint.

Integrating this concept of having a master scheduler with something like Quartz seemed like a natural thing to do. Quartz already has a database back-end that can be used to create/read/update/delete jobs and triggers. Quartz partly works by serializing the class definitions that are associated with a trigger so that when the trigger’s information is deserialized, Quartz knows what to do with it. My services, then, can register easily as long as they all register their triggers using the same base utility classes.

To that end, all I really needed to do was create the scheduler service (a Kestrel process) with the proper hooks into Quartz and a standard way to call the registered services. Eventually, I’ll have a front-end UI that allows a user to create and modify jobs/triggers for a nice cohesive service management layer.

Since I’m using Kestrel processes, I have the typical Startup.cs in my scheduler. I’ll be using a simple IApplicationBuilder extension to attach my Quartz hooks at runtime. It simply defines jobs/triggers through Quartz, and those standard jobs use HttpClient to call the requisite services’ “start work” endpoints.

The IApplicationBuilder extension contains a pretty basic configuration for Quartz to use the AdoJobStore:

```csharp
using System.Collections.Specialized;
using Microsoft.AspNetCore.Builder;
using Microsoft.Extensions.Configuration;
using Quartz;
using Quartz.Impl;

public static class QuartzExtension
{
    public static void UseQuartz(this IApplicationBuilder app)
    {
        // Resolve IConfiguration through the static service provider exposed by Startup
        var config = (Startup.ServiceProviderFactory.ServiceProvider.GetService(typeof(IConfiguration)) as IConfiguration);
        var connStr = config.GetConnectionString("Quartz");

        var properties = new NameValueCollection
        {
            // json serialization is the one supported under .NET Core (binary isn't)
            ["quartz.serializer.type"] = "json",
            ["quartz.scheduler.instanceName"] = "DotnetCoreScheduler",
            ["quartz.scheduler.instanceId"] = "instance_one",
            ["quartz.threadPool.type"] = "Quartz.Simpl.SimpleThreadPool, Quartz",
            ["quartz.threadPool.threadCount"] = "5",
            ["quartz.jobStore.misfireThreshold"] = "60000",
            ["quartz.jobStore.type"] = "Quartz.Impl.AdoJobStore.JobStoreTX, Quartz",
            ["quartz.jobStore.useProperties"] = "false",
            ["quartz.jobStore.dataSource"] = "default",
            ["quartz.jobStore.tablePrefix"] = "QRTZ_",
            ["quartz.jobStore.driverDelegateType"] = "Quartz.Impl.AdoJobStore.SqlServerDelegate, Quartz",
            ["quartz.dataSource.default.provider"] = "SqlServer-20",
            ["quartz.dataSource.default.connectionString"] = connStr
        };

#if NETSTANDARD_DBPROVIDERS
        // Override the provider when building against the .NET Standard DB providers
        properties["quartz.dataSource.default.provider"] = "SqlServer-41";
#endif
```

After the configuration, things get interesting. When Scheduler.Start() is called, any jobs/triggers that exist in the JobStore start. On first run, though, we want to check whether our jobs exist. If they don’t, we create them. You can see below that I’m creating two cron jobs. One calls an API endpoint of the service in question to request status, and the other calls another API endpoint to start the service’s work/task.

```csharp
        var schedulerFactory = new StdSchedulerFactory(properties);
        var scheduler = schedulerFactory.GetScheduler().Result;
        scheduler.Start().Wait();

        var startJobKey = new JobKey("StartService");
        var startJobTriggerKey = new TriggerKey("StartServiceCron");
        var checkStatusJobKey = new JobKey("GetStatus");
        var checkStatusTriggerKey = new TriggerKey("GetStatusCron");

        // Schedule the start-service job unless its trigger is already in the job store
        if (!scheduler.CheckExists(startJobTriggerKey).Result)
        {
            // Run every 10 seconds with no offset
            var callServiceTrigger = TriggerBuilder.Create()
                .WithIdentity(startJobTriggerKey)
                .ForJob(startJobKey)
                .StartNow()
                .WithCronSchedule("0/10 0/1 * 1/1 * ? *")
                .Build();

            if (scheduler.CheckExists(startJobKey).Result)
            {
                // The job is already stored, so only the trigger needs to be added
                scheduler.ScheduleJob(callServiceTrigger).Wait();
            }
            else
            {
                var callServiceJob = JobBuilder.Create<StartServiceJob>()
                    .WithIdentity(startJobKey)
                    .Build();
                scheduler.ScheduleJob(callServiceJob, callServiceTrigger).Wait();
            }
        }

        // Schedule the status-check job unless its trigger is already in the job store
        if (!scheduler.CheckExists(checkStatusTriggerKey).Result)
        {
            // Run every 20 seconds with no offset
            var checkStatusTrigger = TriggerBuilder.Create()
                .WithIdentity(checkStatusTriggerKey)
                .ForJob(checkStatusJobKey)
                .StartNow()
                .WithCronSchedule("0/20 0/1 * 1/1 * ? *")
                .Build();

            if (scheduler.CheckExists(checkStatusJobKey).Result)
            {
                // The job is already stored, so only the trigger needs to be added
                scheduler.ScheduleJob(checkStatusTrigger).Wait();
            }
            else
            {
                var checkStatusJob = JobBuilder.Create<GetStatusJob>()
                    .WithIdentity(checkStatusJobKey)
                    .Build();
                scheduler.ScheduleJob(checkStatusJob, checkStatusTrigger).Wait();
            }
        }
    }
}
```
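The cron strings in the listing are easy to get wrong, so it can help to preview the next few fire times before storing a trigger. The following is only a small sketch, assuming Quartz.NET 3.x; the `CronPreview` class name is mine and not part of the sample. It uses Quartz's `CronExpression` class to step through upcoming fire times for the 10-second schedule.

```csharp
using System;
using Quartz;

// Hypothetical helper (not part of the original sample) for previewing a cron schedule
public static class CronPreview
{
    public static void Main()
    {
        // Same expression as the StartServiceCron trigger
        var cron = new CronExpression("0/10 0/1 * 1/1 * ? *");

        var after = DateTimeOffset.UtcNow;
        for (var i = 0; i < 3; i++)
        {
            // Returns null when the expression has no further fire times
            DateTimeOffset? next = cron.GetNextValidTimeAfter(after);
            if (next == null)
            {
                break;
            }

            Console.WriteLine(next.Value);
            after = next.Value;
        }
    }
}
```

If the expression is doing what the comment claims, the printed times should fall on ten-second boundaries. Swapping in the `0/20` expression checks the status trigger the same way.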

Suffice it to say that the job classes I created (StartServiceJob and GetStatusJob) implement the IJob interface and simply call the API endpoints. At this point, those endpoints are little more than ping endpoints. But the services, as I mentioned above, will eventually do real work when their APIs are accessed, in a similar fashion to the distributed compute example. I’ll eventually put the sample that I’ve been working with on GitHub, but it’s not quite ready to toss out there yet.
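As a rough illustration of what such a job can look like, here is a minimal sketch, assuming Quartz.NET 3.x, where `IJob.Execute` returns a `Task`. The endpoint URL and the choice of a GET request are placeholders of mine, not the actual sample's values; the real services' routes and verbs may differ.

```csharp
using System;
using System.Net.Http;
using System.Threading.Tasks;
using Quartz;

public class StartServiceJob : IJob
{
    // HttpClient is meant to be shared rather than created per request
    private static readonly HttpClient Client = new HttpClient();

    // Hypothetical "start work" endpoint; replace with the real service address
    private const string StartUrl = "http://localhost:5001/api/start";

    public async Task Execute(IJobExecutionContext context)
    {
        try
        {
            // Assumption: the trigger endpoint answers a plain GET
            var response = await Client.GetAsync(StartUrl);
            Console.WriteLine($"{context.JobDetail.Key}: HTTP {(int)response.StatusCode}");
        }
        catch (HttpRequestException ex)
        {
            // Log and swallow so one unreachable service does not fault the job
            Console.WriteLine($"{context.JobDetail.Key}: request failed ({ex.Message})");
        }
    }
}
```

A GetStatusJob would follow the same shape with a different URL. Quartz creates job instances itself, so by default the class needs a public parameterless constructor, which is why the client is held in a static field here.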

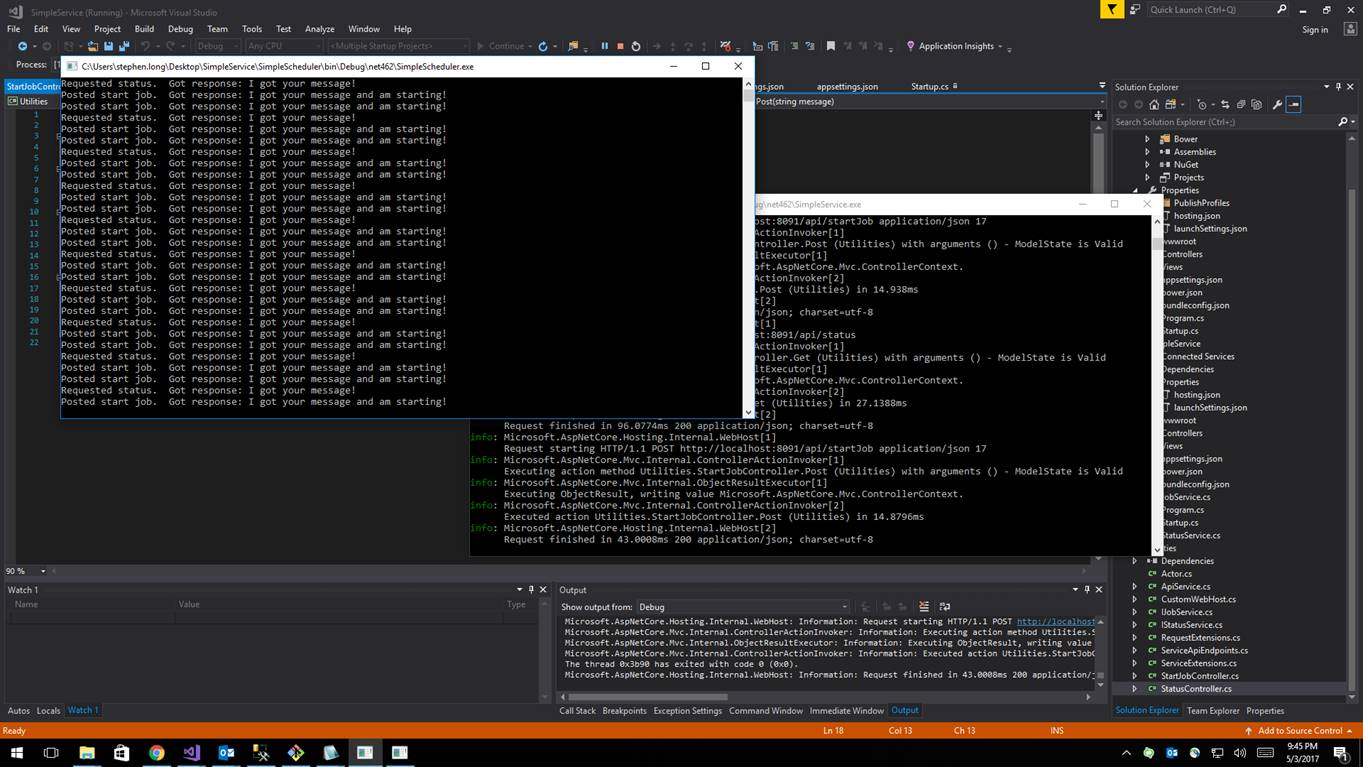

At this point, the sample scheduler plus the one service that I have running produce output showing requests and responses and not much else.